The healthcare industry stands at a critical juncture where artificial intelligence promises revolutionary advancements in medical imaging diagnostics, yet a formidable barrier persists: the data silo dilemma. Hospitals and research institutions worldwide possess vast repositories of medical images, but privacy regulations, competitive interests, and technical challenges keep these valuable datasets isolated. This fragmentation severely limits the potential of AI models that thrive on large, diverse datasets for accurate diagnosis and pattern recognition.

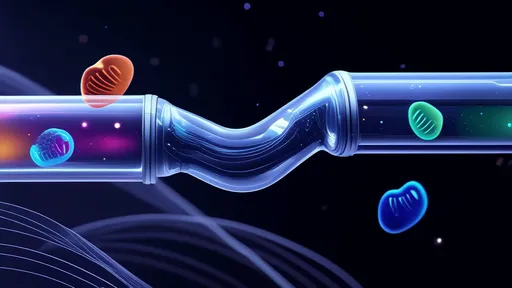

Enter federated learning, an approach that changes how AI models are trained by leaving sensitive data where it is. Rather than consolidating information from multiple sources into a central server, this technique brings the model to the data. In medical imaging, this means that hospitals can collaboratively improve diagnostic algorithms while keeping patient scans securely within their own firewalls. Each institution trains a copy of the model on its own dataset, and only the resulting model updates are combined, so raw images never leave the site.

The implications for breaking down data silos in healthcare are profound. For decades, medical AI development has been hampered by the inability to access sufficient training data across diverse populations and conditions. Smaller healthcare providers particularly struggle to develop robust models with their limited datasets. Federated learning creates an equitable playing field where even rural hospitals can contribute to and benefit from collective intelligence without compromising patient confidentiality or institutional sovereignty.

Technical implementation requires careful coordination. The process begins with a central server initializing a base model, perhaps one designed to detect tumors in MRI scans or identify fractures in X-rays. This model is distributed to participating healthcare institutions, where it trains locally on their proprietary image datasets. After training, instead of sending sensitive patient data, each institution returns only its model update (the learned weights, or the change in weights) to the central server. The server aggregates these updates, commonly by averaging them with each institution weighted by its number of training samples, to produce an improved global model. The cycle then repeats over many rounds, so the global model learns from all participants while the images stay local.
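
The aggregation step can be illustrated with a minimal sketch of sample-weighted averaging, the idea behind the widely used FedAvg algorithm. This is an illustration under stated assumptions, not the method of any particular deployment: the function name `federated_average`, the layer names, and the sample counts are all hypothetical, and real systems operate on tensors rather than plain lists.

```python
from typing import Dict, List, Tuple

# A model is represented here as a mapping from layer name to a flat list of weights.
Weights = Dict[str, List[float]]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighting each client by its local sample count."""
    if not updates:
        raise ValueError("need at least one client update")
    total_samples = sum(n for _, n in updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    # Start from zeros shaped like the first client's weights.
    first_weights, _ = updates[0]
    global_weights = {name: [0.0] * len(vals) for name, vals in first_weights.items()}

    for weights, n in updates:
        share = n / total_samples  # this client's fraction of all training samples
        for name, vals in weights.items():
            layer = global_weights[name]
            for i, v in enumerate(vals):
                layer[i] += share * v
    return global_weights


# Hypothetical example: two hospitals with 300 and 100 local scans.
hospital_a = ({"conv1": [0.5, 1.0], "bias": [1.0]}, 300)
hospital_b = ({"conv1": [1.5, 0.0], "bias": [0.0]}, 100)
new_global = federated_average([hospital_a, hospital_b])
print(new_global)
```

Weighting by sample count means a hospital with more scans pulls the global model further toward its local result; some systems instead weight all participants equally so that small sites are not drowned out.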

Security measures form the bedrock of successful federated learning systems in healthcare, because model updates can themselves leak information about the data they were trained on. Homomorphic encryption allows computations to be performed on encrypted data without decryption, so a server can aggregate encrypted updates without seeing any single institution's contribution. Differential privacy adds another layer of protection, typically by clipping each update and adding calibrated random noise, which mathematically bounds how much any individual patient's record can influence, and therefore be inferred from, the shared updates. These safeguards come with trade-offs in computation cost and model accuracy, but combined with keeping raw images on site they can give federated learning a smaller exposure surface than traditional centralized approaches.
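
As a rough sketch of the differential privacy idea, the snippet below clips a model update to a maximum L2 norm and then adds Gaussian noise scaled to that norm. The names `clip_update`, `privatize_update`, `max_norm`, and `noise_multiplier` are assumptions chosen for illustration. The actual privacy guarantee depends on a privacy accounting analysis across rounds that is not shown here.

```python
import math
import random
from typing import List, Optional


def clip_update(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm == 0.0 or norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [v * scale for v in update]


def privatize_update(
    update: List[float],
    max_norm: float,
    noise_multiplier: float,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Clip the update, then add Gaussian noise with std = noise_multiplier * max_norm."""
    if rng is None:
        rng = random.Random()
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


# Hypothetical update with L2 norm 5.0, clipped to norm 1.0 before noise is added.
raw_update = [3.0, 4.0]
noisy_update = privatize_update(raw_update, max_norm=1.0, noise_multiplier=0.5,
                                rng=random.Random(0))
print(noisy_update)
```

Clipping limits how much any one update can move the global model, and the noise is calibrated to that limit. A larger `noise_multiplier` gives stronger privacy at the cost of a noisier, typically less accurate, model.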

The clinical benefits extend beyond privacy preservation. By enabling collaboration across diverse populations and healthcare systems, federated learning helps create more robust and generalizable AI models. A model trained on data from multiple continents is more likely to recognize diseases across different ethnicities, age groups, and imaging equipment variations. This diversity can reduce bias in AI diagnostics and improve accuracy for underrepresented populations that have historically been excluded from medical AI development due to data scarcity.

Real-world applications already show encouraging results. Several international collaborations have used federated learning to develop AI models for detecting COVID-19 in chest CT scans, brain tumors in MRI images, and diabetic retinopathy in retinal scans. In each case, institutions across different countries and healthcare systems contributed to training without sharing patient images. The resulting models have been reported to outperform those trained on single-institution datasets, and because raw images stay on site, the approach is easier to reconcile with stringent regulations such as HIPAA and GDPR.

Despite its promise, federated learning faces significant challenges in medical imaging applications. The heterogeneity of data across institutions—variations in imaging protocols, equipment manufacturers, and annotation standards—can complicate model convergence. Communication efficiency remains another hurdle, as transferring model updates between numerous institutions requires substantial bandwidth. Researchers are developing innovative solutions including transfer learning techniques to handle data heterogeneity and compression algorithms to reduce communication overhead.
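
One family of compression techniques for reducing communication overhead is sparsification: each institution sends only the largest-magnitude entries of its update and the server treats the rest as zero. The sketch below shows top-k sparsification under simplified assumptions. The function names `top_k_sparsify` and `densify` are hypothetical, and practical systems usually also accumulate the dropped values locally for later rounds, which is omitted here.

```python
from typing import Dict, List


def top_k_sparsify(update: List[float], k: int) -> Dict[int, float]:
    """Keep only the k entries with the largest absolute value, as index -> value."""
    if k < 0:
        raise ValueError("k must be non-negative")
    # Rank positions by magnitude, largest first.
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return {i: update[i] for i in sorted(ranked[:k])}


def densify(sparse: Dict[int, float], length: int) -> List[float]:
    """Rebuild a full-length update on the server, filling dropped entries with zero."""
    dense = [0.0] * length
    for i, v in sparse.items():
        dense[i] = v
    return dense


# Hypothetical five-parameter update; only the two largest changes are transmitted.
update = [0.01, -0.9, 0.05, 0.7, -0.02]
sparse = top_k_sparsify(update, k=2)
restored = densify(sparse, len(update))
print(sparse)
print(restored)
```

Sending index and value pairs only pays off when `k` is much smaller than the number of parameters, which is the usual case for large imaging models.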

The future evolution of federated learning in medical imaging points toward even more sophisticated approaches. Personalized federated learning adapts global models to individual institutional characteristics while maintaining collaborative benefits. Cross-silo federations, the organization-to-organization setting that hospital collaborations already follow, could extend beyond hospitals to include pharmaceutical companies, research laboratories, and medical device manufacturers. Blockchain technology has also been proposed as a way to make model training processes transparent and auditable, which could further enhance trust in these collaborative systems.
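
Personalization can take many forms. One simple variant, shown here only as an illustrative sketch, blends the shared global weights with weights fine-tuned on the institution's own data. The function name `personalize` and the mixing parameter `alpha` are assumptions for this example, and other approaches (such as fine-tuning only the final layers locally) are equally plausible.

```python
from typing import List


def personalize(global_w: List[float], local_w: List[float], alpha: float) -> List[float]:
    """Interpolate between global and local weights.

    alpha = 0.0 returns the global model unchanged; alpha = 1.0 returns the local model.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if len(global_w) != len(local_w):
        raise ValueError("weight vectors must have the same length")
    return [alpha * l + (1.0 - alpha) * g for g, l in zip(global_w, local_w)]


# Hypothetical weights: the site keeps 25% of its local model and 75% of the global one.
site_model = personalize(global_w=[0.0, 2.0], local_w=[1.0, 4.0], alpha=0.25)
print(site_model)
```

A site whose scanners or patient population differ strongly from the federation average might choose a larger `alpha`, while a small site with little data would likely stay closer to the global model.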

Regulatory bodies and healthcare organizations are increasingly recognizing federated learning as a viable path forward. The U.S. Food and Drug Administration has begun creating frameworks for evaluating AI models developed through federated approaches. International consortia are establishing standards for interoperability, security protocols, and validation methodologies. This regulatory maturation will accelerate adoption across the healthcare industry and potentially expand to other sensitive domains beyond medical imaging.

As federated learning continues to evolve, it represents more than just a technical solution—it embodies a paradigm shift in how we approach collaborative AI in sensitive domains. By enabling knowledge sharing while respecting privacy boundaries, this approach aligns technological progress with ethical imperatives. For medical imaging diagnostics, where lives depend on both accuracy and confidentiality, federated learning offers a path to unlock the full potential of AI without compromising the trust that forms the foundation of healthcare.

By /Aug 26, 2025
